Cloud Proxy is a required component in vRealize Operations Manager (vROPS) if you use Telegraf agents to monitor operating systems or applications. However, I have noticed that many people don't know what to do when their Cloud Proxies or Telegraf agents do not work correctly. This post describes basic commands, file locations, and other details that will help you solve problems with Cloud Proxy and/or Telegraf agents.

Check Cloud Proxy to vROPS connectivity and/or upgrade status:

Open an SSH session to the Cloud Proxy, log in as root, and run the command:

```
cprc-cli -s
```

The output should be similar to this:

```
root@vrops-proxy [ ~ ]# cprc-cli -s
-----------------------------------------------------------------------
CPRC deployment and connection configurations
-----------------------------------------------------------------------
Name: vrops-proxy.blanketvm.com
Version: 8.6.2.19081813
IP: 10.20.40.54
Deployed: 1/4/2022
Connected to vRops cloud: 1/1/2022
NTP server(s): [['ntp.blanketvm.com']]
-----------------------------------------------------------------------
Proxy Server Configurations
-----------------------------------------------------------------------
Proxy Server IP: []
Proxy Server Port: []
Proxy Server User: []
SSL Enabled: []
SSL Verification Enabled: []
-----------------------------------------------------------------------
Load Balancer Configurations
-----------------------------------------------------------------------
Load Balancer IP: []
-----------------------------------------------------------------------
Health checking
-----------------------------------------------------------------------
Ping to vROps cloud at: [2022-01-04 11:55:18.439] status: [SUCCESS]
Last success ping at: [2022-01-04 11:55:18.439]
-----------------------------------------------------------------------
Product Upgrade
-----------------------------------------------------------------------
Automated upgrade enabled: [True]
Status: [NONE]
Product PAK: []
Started: []
Download status: [NONE]
Download start: []
Downloaded PAK size: [] MB
Download end: []
-----------------------------------------------------------------------
Product Latest Upgrade
-----------------------------------------------------------------------
Product PAK: [vRealize-Operations-Cloud-Proxy-86219081813]
Status: [SUCCESS]
Type: [AUTOMATED]
Started: [2022-01-03 11:07:12.352]
Completed: [2022-01-03 11:11:37]
Extract status: [SUCCESS]
Download status: [SUCCESS]
Upgrade system reboot at: [2022-01-03 11:11:37]
```
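If you want to check the Cloud Proxy to vROPS connectivity from a script (for example, a cron job), one option is to run `cprc-cli -s` and parse the health-check line. This is a minimal sketch, assuming Python 3.7+ is available where you run it and that the output format matches the sample above; the function names `cloud_ping_status` and `run_cprc_status` are hypothetical, not part of any VMware tool.

```python
import re
import subprocess

# Matches the health-check line printed by `cprc-cli -s`, e.g.
# "Ping to vROps cloud at: [2022-01-04 11:55:18.439] status: [SUCCESS]"
# (format assumed from the sample output in this post).
PING_RE = re.compile(
    r"Ping to vROps cloud at: \[(?P<ts>[^\]]*)\] status: \[(?P<status>[^\]]*)\]"
)


def cloud_ping_status(output: str):
    """Return (timestamp, status) from cprc-cli -s output, or None if the line is missing."""
    match = PING_RE.search(output)
    if match is None:
        return None
    return match.group("ts"), match.group("status")


def run_cprc_status() -> str:
    """Run `cprc-cli -s` (as root on the Cloud Proxy) and return its stdout."""
    result = subprocess.run(["cprc-cli", "-s"], capture_output=True, text=True, check=True)
    return result.stdout
```

On the Cloud Proxy you would call `cloud_ping_status(run_cprc_status())` and alert on anything other than `SUCCESS`. Keeping the parsing separate from the command makes it easy to test against saved output.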

Cloud Proxy log file locations:

Logs directory: /storage/log/vcops/log

The most important log files:

– Casa log: /storage/log/vcops/log/casa/casa.log

– Collector log: /storage/log/vcops/log/collector.log

Changing network settings on Cloud Proxy:

Run the command:

```
/opt/vmware/share/vami/vami_config_net
```

The output should be similar to this:

```
Main Menu

0) Show Current Configuration (scroll with Shift-PgUp/PgDown)
1) Exit this program
2) Default Gateway
3) Hostname
4) DNS
5) Proxy Server
6) IP Address Allocation for eth0

Enter a menu number [0]:
```

https://kb.vmware.com/s/article/2115840

How to manually upgrade a vRealize Operations Cloud Proxy node via CLI:

https://kb.vmware.com/s/article/80590

Check connectivity from the Telegraf agents to the Cloud Proxy:

Verify that the control plane (the ucp-controlplane-saltmaster container) is running:

```
root@vrops-proxy [ ~ ]# docker ps
CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES
a9e4c125d7a6 ucp-docker-local.artifactory.eng.vmware.com/salt-master-bootstrap:8.6.0.96 "/bin/sh -c '/tmp/sa…" 25 hours ago Up 4 hours 0.0.0.0:4505-4506->4505-4506/tcp, 0.0.0.0:8553->8553/tcp ucp-controlplane-saltmaster
```

If the control plane is not working, run the command:

```
docker restart ucp-controlplane-saltmaster
```
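The check-and-restart steps above can be scripted. This is a minimal sketch, assuming Python 3.7+ on the Cloud Proxy and that the container keeps the name ucp-controlplane-saltmaster; the helper names are hypothetical. It relies on `docker ps` reporting a running container's Status as something like "Up 4 hours", as in the output above.

```python
import subprocess

CONTAINER = "ucp-controlplane-saltmaster"  # container name from the docker ps output above


def container_is_up(status: str) -> bool:
    """docker ps shows running containers with a Status such as 'Up 4 hours'."""
    return status.strip().startswith("Up")


def saltmaster_status() -> str:
    """Return the Status column for the Salt master container ('' if it does not exist).

    Note: `--filter name=` matches substrings, so only the first line is used.
    """
    result = subprocess.run(
        ["docker", "ps", "-a", "--filter", f"name={CONTAINER}", "--format", "{{.Status}}"],
        capture_output=True, text=True, check=True,
    )
    lines = result.stdout.strip().splitlines()
    return lines[0] if lines else ""


def ensure_saltmaster_running() -> None:
    """Restart the control plane container if it is not up (same as the manual step)."""
    if not container_is_up(saltmaster_status()):
        subprocess.run(["docker", "restart", CONTAINER], check=True)
```

`ensure_saltmaster_running()` only restarts the container; you still need to run the Salt connectivity test described next.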

If the control plane is working fine, connect to the container and verify Telegraf agent connectivity:

```
root@vrops-proxy [ ~ ]# docker exec -it ucp-controlplane-saltmaster bash
root [ / ]# salt '*' test.ping
e02a74f3-9cb7-3dab-b61c-29dea61a1230_vm-4025:
True
e02a74f1-9cb7-3dab-b61c-29dea61a1230_vm-4024:
True
e02a74f4-9cb7-4dab-b61c-29dea61a1230_vm-165025:
Minion did not return. [Not connected]
e02a74f4-9cb7-4dab-b61c-29dea61a1230_vm-165026:
Minion did not return. [Not connected]
e02a74f4-9cb7-4dab-b61c-29dea61a1230_vm-109023:
Minion did not return. [Not connected]
ERROR: Minions returned with non-zero exit code
```

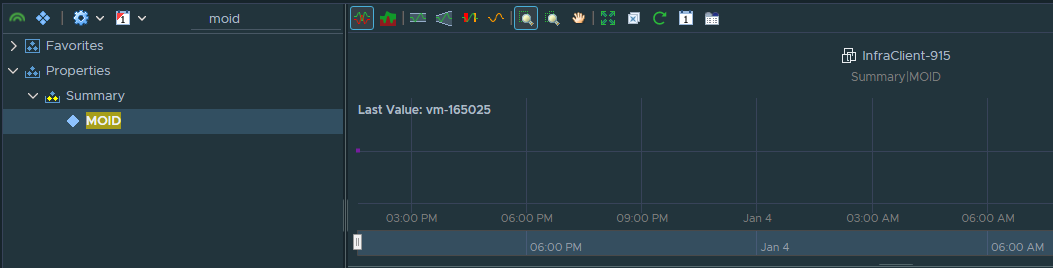

Here we can see that some agents are not reachable. Unfortunately, the output does not show virtual machine names, but we can use vROPS to decode the number at the end of each minion ID (for example, vm-165025): it is the VM's MOID. If you check Properties->Summary->MOID on the VM, you will see that ID. You can also create a View in vROPS that lists VM names together with their MOIDs to look them up quickly.

Check Telegraf agent services:

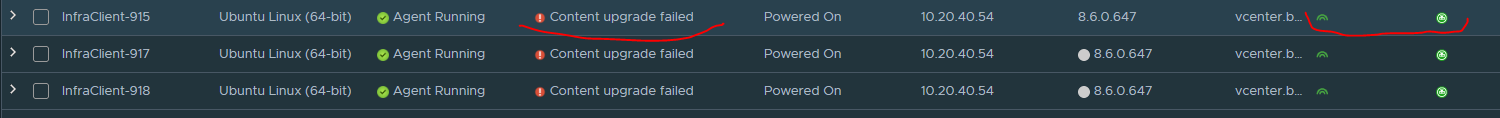

Let’s focus on vm-165025 (InfraClient-915). As you can see, the agent is not connected to the control plane. Check the services on the client virtual machine (Ubuntu in my example):

```
root@InfraClient-915:~# service ucp-minion status
● ucp-minion.service - VMware Application Remote Collector Minion
Loaded: loaded (/lib/systemd/system/ucp-minion.service; enabled; vendor preset: enabled)
Active: active (running) since Tue 2022-01-04 09:11:32 CET; 4h 40min ago
Main PID: 810 (python)
Tasks: 2 (limit: 1070)
Memory: 42.0M
CGroup: /system.slice/ucp-minion.service
└─810 /opt/vmware/ucp/dist/python/bin/python /opt/vmware/ucp/ucp-minion/bin/ucp-minion --config=/opt/vmware/ucp/salt-minion/etc/salt/grains
...
```

```
root@InfraClient-915:~# service ucp-telegraf status
● ucp-telegraf.service - The plugin-driven server agent for reporting metrics into InfluxDB
Loaded: loaded (/lib/systemd/system/ucp-telegraf.service; enabled; vendor preset: enabled)
Active: active (running) since Tue 2022-01-04 09:16:36 CET; 4h 35min ago
Docs: https://github.com/influxdata/telegraf
Main PID: 1355 (telegraf)
Tasks: 8 (limit: 1070)
Memory: 32.1M
CGroup: /system.slice/ucp-telegraf.service
└─1355 /opt/vmware/ucp/ucp-telegraf/usr/bin/telegraf -config /opt/vmware/ucp/ucp-telegraf/etc/telegraf/telegraf.conf -config-directory /opt/vmware/ucp/ucp-telegraf/etc/telegra>
...
```

```
root@InfraClient-915:~# service ucp-salt-minion status
● ucp-salt-minion.service - Salt-Minion in a VirtualEnv
Loaded: loaded (/lib/systemd/system/ucp-salt-minion.service; disabled; vendor preset: enabled)
Active: inactive (dead)
```

We can see that the ucp-salt-minion service is not running. The most common symptom of this situation (ucp-minion and ucp-telegraf running, ucp-salt-minion not) is that all agent metrics are visible in vROPS, but we cannot manage the agents, for example, upgrade them.
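To check all three agent services in one go on a Linux client, you could query `systemctl is-active` for each and interpret the result using the symptom described above. This is a minimal sketch, assuming Python 3.7+ on the client and systemd-managed services named as in the output above; `service_state` and `diagnose` are hypothetical helpers, and the messages only restate what this post observed, not documented VMware behavior.

```python
import subprocess

# Service names as shown in the status output above (Linux agent).
UCP_SERVICES = ("ucp-minion", "ucp-telegraf", "ucp-salt-minion")


def service_state(name: str) -> str:
    """Return the state printed by `systemctl is-active` (e.g. 'active', 'inactive').

    No check=True: systemctl exits non-zero for inactive units, which is expected here.
    """
    result = subprocess.run(["systemctl", "is-active", name], capture_output=True, text=True)
    return result.stdout.strip()


def diagnose(states: dict) -> str:
    """Summarize service states using the symptom described in this post."""
    down = [name for name in UCP_SERVICES if states.get(name) != "active"]
    if not down:
        return "all agent services are running"
    if down == ["ucp-salt-minion"]:
        return "metrics most likely still arrive, but the agent cannot be managed (e.g. upgraded)"
    return "not running: " + ", ".join(down)
```

Usage: `print(diagnose({s: service_state(s) for s in UCP_SERVICES}))`.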

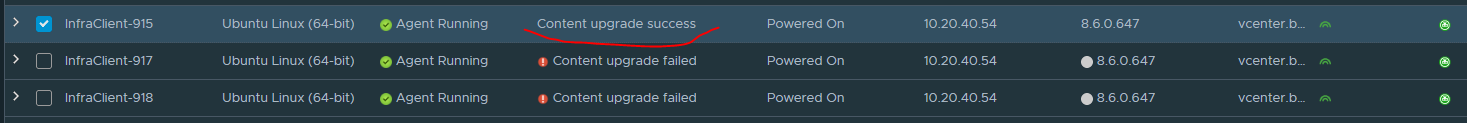

Start the ucp-salt-minion service and verify connectivity:

```
root@InfraClient-915:~# service ucp-salt-minion start
root@InfraClient-915:~# service ucp-salt-minion status
● ucp-salt-minion.service - Salt-Minion in a VirtualEnv
Loaded: loaded (/lib/systemd/system/ucp-salt-minion.service; disabled; vendor preset: enabled)
Active: active (running) since Tue 2022-01-04 14:04:38 CET; 10s ago
Process: 10586 ExecStart=/etc/init.d/ucp-salt-minion start (code=exited, status=0/SUCCESS)
Tasks: 3 (limit: 1070)
Memory: 61.0M
CGroup: /system.slice/ucp-salt-minion.service
└─10613 /opt/vmware/ucp/salt-minion/bin/python /opt/vmware/ucp/salt-minion/bin/salt-minion -c /opt/vmware/ucp/salt-minion/etc/salt -d
```

--------------------------------------------------------------------------------------------------------------------------------------------------

```
root@vrops-proxy [ ~ ]# docker exec -it ucp-controlplane-saltmaster bash
root [ / ]# salt '*' test.ping
e02a74f3-9cb7-3dab-b61c-29dea61a1230_vm-4025:
True
e02a74f1-9cb7-3dab-b61c-29dea61a1230_vm-4024:
True
e02a74f4-9cb7-4dab-b61c-29dea61a1230_vm-165025:
True
```

Other useful information:

By default, Telegraf agent files are located in:

– Windows: C:\VMware\UCP

– Linux: /opt/vmware/ucp

Configuration files (grains and minion):

– Windows: C:\VMware\UCP\salt\conf\grains

– Windows: C:\VMware\UCP\salt\conf\minion

– Linux: /opt/vmware/ucp/salt-minion/etc/salt/grains

– Linux: /opt/vmware/ucp/salt-minion/etc/salt/minion

Example files:

```
root@InfraClient-915:/opt/vmware/ucp/salt-minion/etc/salt# cat grains
add_agent_run_user_and_group: true
add_sudo_perms_for_agent_run_user: true
added_agent_run_user_and_group: true
added_sudo_perms_for_agent_run_user: true
arc_fqdn: vrops-proxy.blanketvm.com
ca_file: ca.pem
cert_file: cert.pem
certs_dir: /opt/vmware/ucp/certkeys
compatibility_error_msg: Recommended version(s) for Ubuntu Linux (64-bit):17.x,18.x.
configured_plugins_collection_interval_secs: 300
connections_discovery_collection_interval_secs: -1
discovery_collection_interval_secs: 300
dl_url: https://vrops-proxy.blanketvm.com:443/downloads/salt
emqtt_https_server_publish_path: /mqtt/publish
emqtt_https_server_url: https://vrops-proxy.blanketvm.com:8884
emqtt_server: vrops-proxy.blanketvm.com:8883
https_metric_publish_path: https://vrops-proxy.blanketvm.com/arc/metric
https_opr_publish_path: https://vrops-proxy.blanketvm.com/arc/control
https_server_url: https://vrops-proxy.blanketvm.com
ip_discovery_collection_interval_secs: -1
job: install
key_file: key.pem
max.bootstrap.time.seconds: 600
max_message_size_in_kb: 60
mep_status_collection_interval_secs: 300
monitor_agents_interval_secs: 300
monitoring_agent_collection_interval: 300s
mqtt_conn_type: persistent
os_type: Unix
prerequisite_check_ports: 443,4505,4506
prerequisite_check_unix_commands: /bin/bash,sudo,tar,awk,curl,chmod,chown,cat,useradd,groupadd,userdel,groupdel
process_discovery_collection_interval_secs: -1
remotedir: /tmp/vmware-root_888-2730562489/VMware-UCP_Bootstrap_Scriptsvmware60
salt_master_fqdn: vrops-proxy.blanketvm.com
salt_minion_dir: /opt/vmware/ucp/salt-minion
salt_minion_sha256: 5e764e0acca3bfac161f3cf893bfda17b54d8fc52f32faefe907bac56bb63446
ucp_minion_cmd: /opt/vmware/ucp/ucp-minion/bin/ucp-minion.sh --config=/opt/vmware/ucp/salt-minion/etc/salt/grains
ucp_minion_log_path: /opt/vmware/ucp/ucp-minion/var/log/ucp-minion.log
ucp_uaf_agent_run_group: arcgroup
ucp_uaf_agent_run_user: arcuser
ucp_uaf_agents_registry_path: /opt/vmware/ucp/uaf/agents-registry.json
ucp_uaf_configured_plugins_dir: /opt/vmware/ucp/plugins
ucp_uaf_configured_plugins_json_path: /opt/vmware/ucp/plugins/telegraf
ucp_uaf_install_dir: /opt/vmware/ucp
ucp_uaf_install_dir_for_telegraf_conf: /opt/vmware/ucp
ucp_uaf_registry_dir: /opt/vmware/ucp/uaf
ucp_uaf_sd_svc_signatures_path: /opt/vmware/ucp/uaf/sd-svc-signatures.yaml
ucp_uaf_telegraf_conf_path: /opt/vmware/ucp/ucp-telegraf/etc/telegraf/telegraf.conf
ucp_uaf_telegraf_plugin_error_log: /opt/vmware/ucp/ucp-telegraf/var/log/PluginErrorDetails.log
vm_id: vm-165025
vm_name: InfraClient-915
vm_status_lock: null
```
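The grains file already records which Cloud Proxy the agent talks to (`salt_master_fqdn`) and which ports the installer checks (`prerequisite_check_ports`). This is a minimal sketch that reads those two values to build a list of host/port pairs to test. It assumes the flat `key: value` layout shown above and is not a full YAML parser (PyYAML would be more robust, and keys with empty values are skipped); `parse_grains` and `connectivity_targets` are hypothetical names.

```python
def parse_grains(text: str) -> dict:
    """Parse flat 'key: value' lines of the grains file; values stay strings."""
    grains = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        # Split on the first ": " only, so values such as URLs or
        # "(64-bit):17.x" are kept intact.
        key, sep, value = line.partition(": ")
        if sep:
            grains[key] = value
    return grains


def connectivity_targets(grains: dict) -> list:
    """Return (host, port) pairs for the Salt master and the prerequisite ports."""
    host = grains["salt_master_fqdn"]
    ports = grains.get("prerequisite_check_ports", "")
    return [(host, int(p)) for p in ports.split(",") if p.strip()]
```

For the file above this yields the Cloud Proxy FQDN paired with ports 443, 4505, and 4506.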

----------------------------------------------------------------------------------------------------------------

```
root@InfraClient-915:/opt/vmware/ucp/salt-minion/etc/salt# cat minion
master: vrops-proxy.blanketvm.com
id: e02a74f4-9cb7-4dab-b61c-29dea61a1230_vm-165025
# The root directory prepended to these options: pki_dir, cachedir,
# sock_dir, log_file, autosign_file, autoreject_file, extension_modules,
# key_logfile, pidfile:
root_dir: /opt/vmware/ucp/salt-minion
# in seconds
random_reauth_delay: 180
# in milliseconds
recon_default: 10000
# in milliseconds
recon_max: 170000
# The user to run salt.
user: arcuser
# The user to run salt remote execution commands as via sudo. If this option is
# enabled then sudo will be used to change the active user executing the remote
# command. If enabled the user will need to be allowed access via the sudoers
# file for the user that the salt minion is configured to run as. The most
# common option would be to use the root user. If this option is set the user
# option should also be set to a non-root user. If migrating from a root minion
# to a non root minion the minion cache should be cleared and the minion pki
# directory will need to be changed to the ownership of the new user.
#sudo_user: {UCP_AGENT_RUN_USER}
####BEGIN For Customized Configs####
multiprocessing: False
####END For Customized Configs####
```
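In the example files, the minion's `master` equals the grains value `salt_master_fqdn`, and the minion `id` ends with `_` plus the grains `vm_id`. A quick consistency check between the two files can flag an agent that still points at an old Cloud Proxy. This is a minimal sketch, assuming that these two relationships always hold, which is an inference from this one example rather than documented behavior; `check_minion_config` is a hypothetical helper and the parser handles only flat `key: value` lines.

```python
def parse_flat(text: str) -> dict:
    """Parse flat 'key: value' lines, skipping comments (not a full YAML parser)."""
    data = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        key, sep, value = line.partition(": ")
        if sep:
            data[key] = value
    return data


def check_minion_config(minion_text: str, grains_text: str) -> list:
    """Return a list of human-readable mismatches between minion and grains."""
    minion = parse_flat(minion_text)
    grains = parse_flat(grains_text)
    problems = []
    master = minion.get("master")
    expected_master = grains.get("salt_master_fqdn")
    if master != expected_master:
        problems.append(f"minion master {master!r} != grains salt_master_fqdn {expected_master!r}")
    vm_id = grains.get("vm_id")
    minion_id = minion.get("id", "")
    if vm_id and not minion_id.endswith("_" + vm_id):
        problems.append(f"minion id {minion_id!r} does not end with _{vm_id}")
    return problems
```

An empty list means the two files agree on both points.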

Summary

Stay tuned!

Hi Pawel,

Great post! Very informative.

I have an issue with some VMs that fail to install the Telegraf agent; the error is “connect to salt master”.

Any ideas what could cause this error? I tried looking through the Telegraf agent logs on the server but cannot find anything relevant to the error. I am using vRealize Operations 8.6.2.


Did you check network connectivity between the client and the Cloud Proxy?

You need to have these ports open: 443, 4505, and 4506. Confirm this using telnet, nc, curl, or a similar tool.
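If telnet or nc is not installed on the client, a plain TCP connection test does the same job. This is a minimal sketch, assuming Python 3 on the client machine; `port_open` and `check_ports` are hypothetical names. It only proves that a TCP connection can be opened; it does not test TLS or the Salt handshake, so a secure-channel problem can still exist when all ports report as open.

```python
import socket

# Ports named in the reply above (also listed as prerequisite_check_ports in grains).
REQUIRED_PORTS = (443, 4505, 4506)


def port_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port can be established."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable, DNS failure, or timeout
        return False


def check_ports(host: str, ports=REQUIRED_PORTS, timeout: float = 3.0) -> dict:
    """Map each port to True/False reachability from this machine."""
    return {port: port_open(host, port, timeout) for port in ports}
```

Run it on the client, for example `check_ports("vrops-proxy.blanketvm.com")`, and look for any port reported as `False`.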

Your Cloud Proxy also needs to communicate with vCenter and ESXi. Take a look at these diagrams:

https://docs.vmware.com/en/vRealize-Operations/8.6/com.vmware.vcom.core.doc/GUID-85EC81E1-A40B-4FA3-A105-24A2137D1870.html

Additionally, search for the uaf_bootstrap.log file on the client machine; it should contain something helpful.


Thanks for pointing me in the right direction!

It was failing because it could not reach the Salt master: it couldn’t create a secure channel between the client and the Cloud Proxy.

https://docs.vmware.com/en/vRealize-Operations/8.6/com.vmware.vcom.core.doc/GUID-83B814AC-F9C1-4F19-A0CB-0678AE327BC0.html


Great article; I was looking for something like this in a single place.

Thank you
